Congress won’t block state AI regulations. Here’s what that means for consumers

After months of debate, a plan in Congress to block states from regulating artificial intelligence was pulled from the big federal budget bill this week. The proposed 10-year moratorium would have prevented states from enforcing AI rules and laws if they accepted federal funding for broadband access.

The issue divided technology experts and politicians, with some Senate Republicans joining Democrats in opposing the move. The Senate ultimately voted 99-1 to remove the proposal from the bill, which also includes an extension of the 2017 federal tax cuts and cuts to services like Medicaid and SNAP. Congressional Republican leaders have said they want to have the measure on President Donald Trump’s desk by July 4.

Tech companies and many congressional Republicans supported the moratorium, saying it would prevent a “patchwork” of state and local rules and regulations that could hinder AI development, particularly in the context of competition with China. Critics, including consumer advocates, said states should have a free hand to protect people from potential problems with the fast-growing technology.

“The Senate came together tonight to say that we can’t just run over good state consumer protection laws,” Sen. Maria Cantwell, a Washington Democrat, said in a statement. “States can fight robocalls and deepfakes and provide safe autonomous vehicle laws. This also allows us to work together nationally to provide a new federal framework on artificial intelligence that accelerates US leadership in AI while still protecting consumers.”

Even though the moratorium was pulled from the bill, the debate over how the government can appropriately balance consumer protection and support for innovation will likely continue. “There was a lot of debate at the state level, and I think it’s important to approach this issue at multiple levels,” said Anjana Susarla, a professor at Michigan State University who studies AI. “You can approach it at the national level. You can approach it at the state level too. I think you need both.”

Several states have already started regulating AI

The proposed moratorium would have barred states from enforcing regulations, including those already on the books. The exceptions were rules and laws that make things easier for AI development and those that apply the same standards to non-AI models and systems that do similar things. These kinds of regulations are already starting to pop up. The biggest focus is not in the US but in Europe, where the European Union has already implemented standards for AI. But states are starting to get in on the action.

Colorado passed a set of consumer protections last year that are set to take effect in 2026. California adopted more than a dozen AI-related laws last year. Other states have laws and regulations that often deal with specific issues, such as deepfakes, or that require AI developers to publish information about their training data. At the local level, some regulations also address potential employment discrimination when AI systems are used in hiring.

So far in 2025, state legislators have introduced at least 550 proposals around AI, according to the National Conference of State Legislatures, said Arsen Kourinian, a partner at the law firm Mayer Brown. At a House committee hearing last month, Rep. Jay Obernolte, a Republican from California, signaled a desire to get ahead of more state-level regulation. “We have a limited legislative runway to get that problem solved before the states get too far ahead,” he said.


While some states have laws on the books, not all of them have taken effect or seen any enforcement. That limits the potential short-term impact of a moratorium, said Cobun Zweifel-Keegan, managing director in Washington for the IAPP. “There isn’t really any enforcement yet.”

A moratorium would likely deter state lawmakers and policymakers from developing and proposing new regulations, Zweifel-Keegan said. “The federal government would become the primary and potentially sole regulator around AI systems,” he said.

What do state AI regulations mean?

AI developers have asked for any guardrails placed on their work to be consistent and streamlined.

“As an industry and as a country, we need one clear federal standard, whatever it may be,” Alexandr Wang, founder and CEO of the data company Scale AI, told lawmakers at an April hearing. “But we need clarity as to one federal standard, and we need preemption to prevent this outcome where you have 50 different standards.”

During a May hearing of the Senate Commerce Committee, OpenAI CEO Sam Altman told Sen. Ted Cruz, a Republican from Texas, that an EU-style regulatory system would be “disastrous” for the industry. Altman instead suggested that the industry develop its own standards.

Asked by Sen. Brian Schatz, a Hawaii Democrat, whether industry self-regulation was sufficient at this point, Altman said he thought some guardrails would be good but warned that regulation could go too far. (Disclosure: CNET’s parent company Ziff Davis filed a lawsuit against OpenAI in April, alleging that it infringed Ziff Davis copyrights in training and operating its AI systems.)

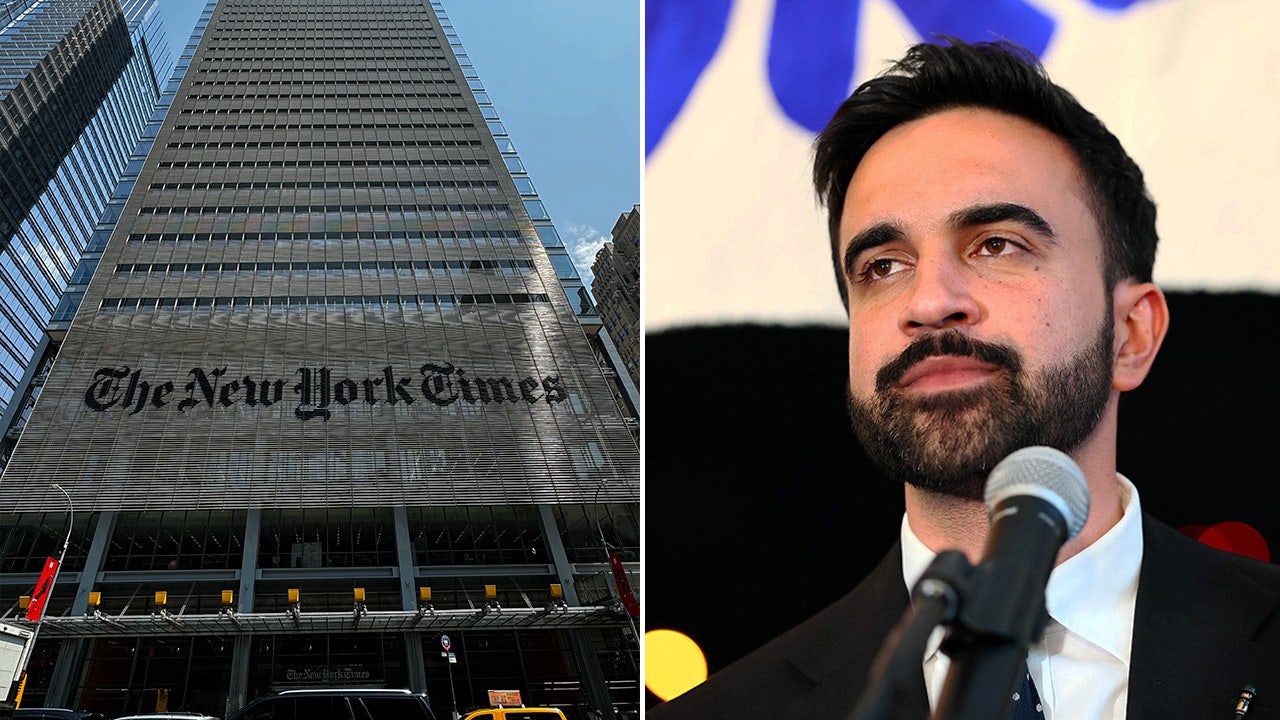

Not every AI company backed the moratorium, however. In a New York Times op-ed, Anthropic CEO Dario Amodei called it “too blunt” and said the federal government should instead create transparency standards for AI companies. “Having this national transparency standard would help not only the public but also Congress understand how the technology is developing, so that lawmakers can decide whether further government action is needed,” he wrote.

The proposed 10-year moratorium on state AI laws then went to the US Senate, where the Committee on Commerce, Science and Transportation had already held hearings on artificial intelligence.

Concerns from both developers who create AI systems and “deployers” who use them in interactions with consumers often stem from fears that states will mandate significant work, such as impact assessments or transparency notices, before a product is released, Kourinian said. Consumer advocates have said more regulation is needed and that hampering states’ ability to act could hurt users’ privacy and safety.

A moratorium on specific state rules and laws could result in more consumer protection issues being dealt with in court or by state attorneys general, Kourinian said. Existing laws on unfair and deceptive practices that are not specific to AI would still apply. “Time will tell how judges will interpret those issues,” he said.

Susarla said the pervasiveness of AI across industries means states might be able to regulate issues like privacy and transparency more broadly, without focusing on the technology itself. But a moratorium on AI regulation could lead to such policies being tied up in lawsuits. “There has to be some kind of balance between ‘we don’t want to stop innovation’ and recognizing that there may be real consequences,” she said.

Much of the policy governing AI systems actually comes from these so-called technology-agnostic rules and laws, according to Zweifel-Keegan. “It’s worth remembering that there are a lot of existing laws, and there is potential to make new laws that don’t trigger the moratorium but do apply to AI systems, as long as they apply to other systems too,” he said.

What’s next for federal AI regulations?

One of the key lawmakers pushing for the moratorium’s removal from the bill was Sen. Marsha Blackburn, a Tennessee Republican. Blackburn said she wanted to make sure states could protect children and creators, like the country musicians her state is famous for. “Until Congress passes federally preemptive legislation like the Kids Online Safety Act and an online privacy framework, we can’t block states from standing in the gap to protect vulnerable Americans from harm, including Tennessee creators and precious children,” she said in a statement.

Groups that opposed preemption of state laws said they hope Congress’s next move is to take steps toward actual regulation of AI, which could make state laws unnecessary. If tech companies are “seeking federal preemption, they should seek federal preemption along with a federal law that provides rules of the road,” Jason Van Beek, chief government affairs officer at the Future of Life Institute, told me.

Ben Winters, director of AI and data privacy at the Consumer Federation of America, said Congress could try again to preempt state laws. “Basically, it’s just a bad idea,” he told me. “It doesn’t really matter whether it happens in the budget process.”